AI-Driven Quadruped Robot Excels in Badminton

Introduction

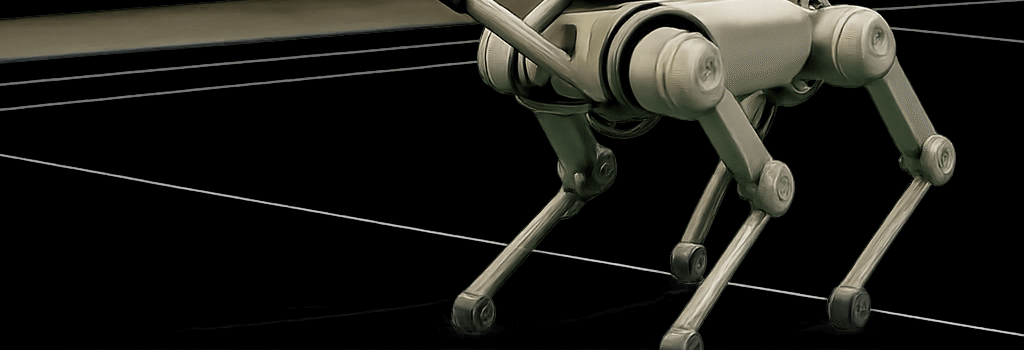

Robotic platforms such as Boston Dynamics’ Spot, Agility Robotics’ Digit, and ANYbotics’ ANYmal have demonstrated remarkable agility and balance. However, translating those locomotion skills into rapid visuo-motor reflexes—necessary for sports like badminton—remains a frontier in robotics. Researchers at ETH Zurich’s Robotic Systems Lab have now built an AI-powered, badminton-playing quadruped that integrates high-performance hardware with deep reinforcement learning to chase and return shuttlecocks.

Robotic Platform and Hardware Specifications

- Base Robot: ANYmal C from ANYbotics—50 kg, four legs with series-elastic actuators, 1.5 kN peak force per leg, compliant design.

- Manipulation Unit: 6-DoF robotic arm by Duatic, 2 Nm joint torque, 1.2 m/s max angular velocity, custom end-effector mounts a standard carbon-fiber badminton racket.

- Sensing Suite: Stereo camera rig (60 Hz @ 640×480 px, 50 mm baseline); IMU (1 kHz); optionally, an event camera prototype (microsecond latency, 1280×720 px) for future upgrades.

- Compute: NVIDIA Jetson AGX Xavier (32 TOPS) running ROS 2, CUDA-accelerated vision pipeline, PyTorch-based policy inference at 100 Hz.
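To give a feel for what the stereo rig in the sensing suite implies, the sketch below applies the standard rectified-stereo relation Z = f·B/d to the 50 mm baseline. The focal length in pixels (`FOCAL_PX`) and the one-pixel disparity error are illustrative assumptions, not values reported for the ETH Zurich system.

```python
BASELINE_M = 0.050   # 50 mm stereo baseline (from the spec list above)
FOCAL_PX = 500.0     # ASSUMPTION: focal length in pixels, not from the source


def depth_from_disparity(disparity_px: float) -> float:
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return FOCAL_PX * BASELINE_M / disparity_px


def depth_error(depth_m: float, disparity_err_px: float = 1.0) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (FOCAL_PX * BASELINE_M) * disparity_err_px


if __name__ == "__main__":
    for z in (1.0, 2.0, 4.0):
        err = depth_error(z)
        print(f"depth {z:.1f} m: about {err:.2f} m per pixel of disparity error")
```

Under these assumptions the depth uncertainty grows with the square of the distance, which is one reason a short baseline makes a distant shuttlecock hard to localize.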

Control Architecture and Learning Framework

Rather than relying solely on classical model predictive control (MPC), the team adopted a model-free reinforcement learning approach. During development:

- They built a high-fidelity digital twin in NVIDIA Isaac Sim, modeling leg compliance, actuator dynamics, and racket mass distribution.

- They used Soft Actor-Critic (SAC) with domain randomization over shuttlecock mass (4.75–5.5 g), air drag coefficients, and joint friction.

- Training spanned 200 million environment steps across 10,000 episodes, each episode requiring six consecutive returns.

- An auxiliary perception network (ResNet-18 backbone) was co-trained to predict 3D shuttle trajectories from stereo input with Gaussian noise augmentation.
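The domain randomization step in the list above can be pictured as drawing a fresh set of physics parameters at the start of every simulated episode. The following is a minimal sketch of that idea, not the team's training code: only the shuttlecock mass range (4.75–5.5 g) comes from the article, while the drag and friction ranges, the uniform distribution, and the names `EpisodeParams` and `sample_episode_params` are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class EpisodeParams:
    shuttle_mass_g: float
    drag_coefficient: float
    joint_friction: float


MASS_RANGE_G = (4.75, 5.5)       # from the article
DRAG_RANGE = (0.5, 0.7)          # ASSUMPTION: illustrative range only
FRICTION_RANGE = (0.01, 0.05)    # ASSUMPTION: illustrative range only


def sample_episode_params(rng: random.Random) -> EpisodeParams:
    """Draw one set of physics parameters, uniformly within each range."""
    return EpisodeParams(
        shuttle_mass_g=rng.uniform(*MASS_RANGE_G),
        drag_coefficient=rng.uniform(*DRAG_RANGE),
        joint_friction=rng.uniform(*FRICTION_RANGE),
    )


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the draws are reproducible
    for _ in range(3):
        print(sample_episode_params(rng))
```

Because the policy never sees the same physics twice, it is pushed toward behavior that works across the whole range, which is what helps it transfer from simulation to the real robot.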

Performance Evaluation

“The goal was to fuse perception and body movement with human-like reflexes,” remarked Dr. Yuntao Ma, lead roboticist at ETH Zurich.

In lab trials against intermediate human players, ANYmal achieved a consistent ~60% success rate on gentle rallies. Key observations:

- Reaction Time: ~350 ms from shuttle release to control command, compared to 120–150 ms in elite athletes.

- Movement Strategy: Learned center-court positioning post-return, mirroring human tactics.

- Risk Management: Fall-avoidance reflex and safe torque limits prevented self-damage during high-speed lunges.

Technical Challenges

Perception vs. Motion Blur

High-speed locomotion induces camera shake and motion blur, degrading object tracking. The stereo rig’s 60 Hz frame rate and rolling shutter introduced up to 10 cm localization error at 4 m/s swing speed.
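A quick back-of-the-envelope check shows why these numbers are plausible. The sketch below computes how far something moving at the quoted 4 m/s travels between two 60 Hz frames, and how far it travels while the shutter is open. The 5 ms exposure time is an assumption for illustration, and rolling-shutter skew is not modeled.

```python
FRAME_RATE_HZ = 60.0      # stereo rig frame rate (from the article)
SWING_SPEED_M_S = 4.0     # swing speed quoted in the article
EXPOSURE_S = 0.005        # ASSUMPTION: 5 ms exposure, not from the source


def displacement_per_frame(speed_m_s: float, frame_rate_hz: float) -> float:
    """Distance travelled during one frame period, in metres."""
    return speed_m_s / frame_rate_hz


def blur_length(speed_m_s: float, exposure_s: float) -> float:
    """Distance travelled while the shutter is open, in metres."""
    return speed_m_s * exposure_s


if __name__ == "__main__":
    gap_cm = 100 * displacement_per_frame(SWING_SPEED_M_S, FRAME_RATE_HZ)
    blur_cm = 100 * blur_length(SWING_SPEED_M_S, EXPOSURE_S)
    print(f"motion between frames: {gap_cm:.1f} cm")
    print(f"motion during exposure: {blur_cm:.1f} cm")
```

At 4 m/s the scene shifts by roughly 6.7 cm between consecutive frames, the same order of magnitude as the 10 cm localization error reported above.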

Compute Bottlenecks

The Jetson AGX Xavier’s onboard GPU runs the vision and control networks at 100 Hz, a 10 ms cycle, but end-to-end latency peaks at 50 ms. In the worst case, commands therefore lag the scene by about five control cycles.
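One common way to cope with this kind of lag is to predict where the shuttlecock will be once the command actually takes effect. The sketch below is a generic illustration of that idea and is not taken from the ETH Zurich system: it integrates gravity plus quadratic air drag forward over the latency window. The terminal speed of 6.8 m/s, the Euler integrator, and the function names are assumptions.

```python
import math

G = 9.81                 # gravitational acceleration, m/s^2
TERMINAL_SPEED = 6.8     # ASSUMPTION: shuttlecock terminal speed in m/s
CONTROL_RATE_HZ = 100.0  # policy rate from the article
LATENCY_S = 0.050        # peak end-to-end latency from the article


def lag_in_control_ticks(latency_s: float, rate_hz: float) -> int:
    """Number of control periods that elapse during the latency."""
    return round(latency_s * rate_hz)


def predict_shuttle(pos, vel, horizon_s, dt=0.001):
    """Integrate gravity plus quadratic drag forward by horizon_s (explicit Euler).

    pos and vel are (x, y, z) tuples in metres and m/s, with z pointing up.
    """
    x, y, z = pos
    vx, vy, vz = vel
    k = G / TERMINAL_SPEED ** 2   # drag deceleration magnitude = k * |v|^2
    steps = int(round(horizon_s / dt))
    for _ in range(steps):
        speed = math.sqrt(vx * vx + vy * vy + vz * vz)
        ax = -k * speed * vx
        ay = -k * speed * vy
        az = -G - k * speed * vz
        # Advance position with the current velocity, then update velocity.
        x, y, z = x + vx * dt, y + vy * dt, z + vz * dt
        vx, vy, vz = vx + ax * dt, vy + ay * dt, vz + az * dt
    return (x, y, z), (vx, vy, vz)


if __name__ == "__main__":
    print("lag in ticks:", lag_in_control_ticks(LATENCY_S, CONTROL_RATE_HZ))
    pos, vel = predict_shuttle((0.0, 0.0, 2.0), (10.0, 0.0, 0.0), LATENCY_S)
    print("predicted position after latency:", pos)
```

For a shuttle moving horizontally at 10 m/s, a constant-velocity guess would place it 0.5 m further along after 50 ms. With drag included the predicted travel is slightly shorter, and the gap widens at higher speeds.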

Hardware and Perception Upgrades

- Event-Based Vision: Integrating Prophesee Gen4 event sensors for µs-scale latency and no motion blur, improving shuttle detection at high angular speeds.

- Edge AI Acceleration: Upgrading to NVIDIA Jetson Orin NX (100 TOPS) or dedicated TPU modules to halve inference latency.

- High-Torque Actuators: Next-gen series-elastic actuators with 2 kN output and 1 kHz control loops to reduce joint lag.

Expert Opinions on Low-Latency Sensing

Dr. Mikael Lund, senior engineer at a leading robotics firm: “Event cameras will revolutionize closed-loop sports robotics by cutting perception latency by an order of magnitude. Coupled with predictive models of opponent kinematics, reaction times below 100 ms are within reach.”

Future Directions and Applications

The ETH Zurich framework, which balances perception noise against control agility, could extend to:

- Dynamic warehouse logistics: high-speed pick-and-place in cluttered, moving environments.

- Search-and-rescue: agile navigation in debris-filled zones with unpredictable obstacles.

- Human-robot collaboration: passing and catching tasks in manufacturing lines.

Conclusion

While ANYmal’s current badminton performance remains at an amateur level, the integration of advanced vision sensors, high-performance compute, and deep reinforcement learning marks a significant step toward real-world, reflex-driven sports robots. Future upgrades in sensing and actuation could narrow the gap to human reflexes, opening new frontiers in agile robotics.

Reference: Ma, Y. et al. “Learning coordinated badminton skills for legged manipulators.” Science Robotics, 2025. DOI:10.1126/scirobotics.adu3922.