Mattel’s AI Toys: Playtime or a Digital Concern?

Barbie Dream World or AI Nightmare?

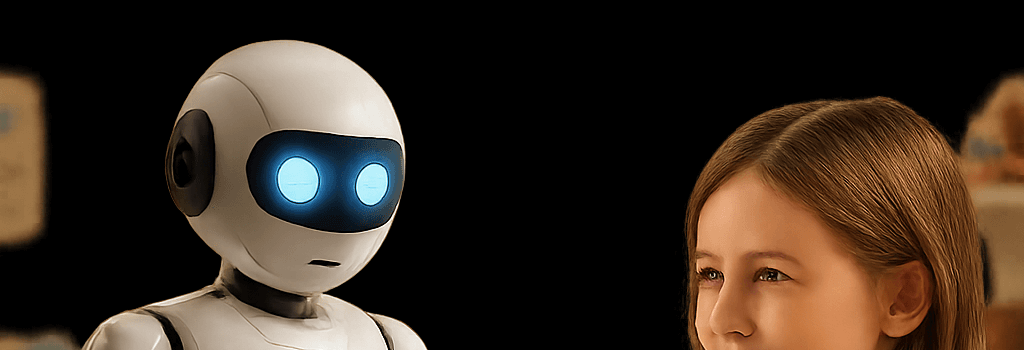

After Mattel and OpenAI announced a landmark partnership to embed conversational AI into children’s toys, consumer advocates and technical experts have sounded alarms about potential developmental, privacy, and security risks. As Mattel teases AI-enhanced Barbie and Hot Wheels lines, many parents and regulators are demanding clear answers on how these smart toys will function under the hood, how data will be handled, and what safeguards will be in place.

Transparency and Safety Concerns Raised by Public Citizen

On June 17, 2025, Public Citizen co-President Robert Weissman issued a public statement urging Mattel to disclose its AI toy design principles before any product ships. Weissman warned:

Endowing toys with human-seeming voices that engage in free-form conversation risks inflicting real damage on children’s social development and imaginative play. Toys that converse like peers may undermine real-world relationships and cause confusion between reality and play.

Weissman also called on Mattel to draw firm red lines—particularly a ban on AI deployments for children under 13—and to commit to independent third-party audits of both safety and privacy.

Mattel and OpenAI’s Public Statements

The joint press release from Mattel and OpenAI offered few specifics:

We will support AI-powered products and experiences based on Mattel’s iconic brands. The first release will be announced by year-end, with a full launch expected in 2026.

Both companies claim they will adhere to all COPPA (Children’s Online Privacy Protection Act) and GDPR requirements, implement age-appropriate content filters, and prioritize data minimization and encryption. However, no specific technical details—such as whether natural language processing occurs in the cloud or at the network edge—have been disclosed.

Technical Architecture of AI-Driven Toys

Industry insiders suggest the AI toys will combine an embedded ARM Cortex-A7 application processor with a Wi-Fi or Bluetooth LE module for cloud connectivity. Key components include:

- Natural Language Understanding (NLU): Voice inputs are captured by a MEMS microphone, digitized at 16-bit/16 kHz, and transmitted over TLS 1.3 to GPT-4-class models running on OpenAI’s servers.

- Natural Language Generation (NLG): Responses are generated with a 32k token context window, allowing multi-turn conversation but also raising latency and bandwidth concerns.

- On-Device Edge Cache: A local SQLite database may store sanitized interaction logs for offline replay, requiring robust encryption-at-rest (AES-256) and secure key management.
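To put the capture format from the first bullet into numbers, the following minimal sketch estimates the uncompressed audio bandwidth implied by 16-bit/16 kHz sampling. It assumes a single (mono) channel and raw PCM with no codec, neither of which has been confirmed by Mattel or OpenAI; a shipping product would likely compress audio before upload. The function names are illustrative only.

```python
# Back-of-the-envelope estimate of raw PCM audio bandwidth.
# Assumptions (not confirmed by any vendor): mono capture, no compression.

SAMPLE_RATE_HZ = 16_000   # 16 kHz, as reported above
BITS_PER_SAMPLE = 16      # 16-bit samples, as reported above
CHANNELS = 1              # assumption: a single MEMS microphone


def pcm_bitrate_bps(sample_rate_hz: int = SAMPLE_RATE_HZ,
                    bits_per_sample: int = BITS_PER_SAMPLE,
                    channels: int = CHANNELS) -> int:
    """Return the raw PCM bitrate in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


def pcm_bytes(duration_s: float) -> int:
    """Return the bytes needed for `duration_s` seconds of raw audio."""
    return int(pcm_bitrate_bps() * duration_s / 8)


if __name__ == "__main__":
    print(f"Bitrate: {pcm_bitrate_bps() / 1000:.0f} kbit/s")
    print(f"5-second utterance: {pcm_bytes(5) / 1000:.0f} kB")
```

Under these assumptions the stream needs 256 kbit/s, or 160 kB for a five-second utterance, before any TLS or protocol overhead.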

Keeping latency under 300 ms will be critical to maintaining conversational flow, but real-world Wi-Fi conditions could introduce jitter that disrupts the play experience.
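One way to reason about the latency target is to measure how often responses land inside the budget and how much consecutive responses vary. The sketch below is illustrative only: the sample values are invented, and defining jitter as the mean absolute difference between consecutive measurements is one common convention, not a method attributed to Mattel or OpenAI.

```python
from statistics import mean

LATENCY_BUDGET_MS = 300.0  # target cited above


def mean_jitter_ms(samples_ms):
    """Mean absolute difference between consecutive latency samples."""
    if len(samples_ms) < 2:
        return 0.0
    diffs = [abs(b - a) for a, b in zip(samples_ms, samples_ms[1:])]
    return mean(diffs)


def fraction_within_budget(samples_ms, budget_ms=LATENCY_BUDGET_MS):
    """Share of responses that arrived within the latency budget."""
    if not samples_ms:
        return 0.0
    return sum(1 for s in samples_ms if s <= budget_ms) / len(samples_ms)


# Hypothetical round-trip measurements in milliseconds (illustrative only).
samples = [220.0, 250.0, 410.0, 240.0, 290.0]

if __name__ == "__main__":
    print(f"Within budget: {fraction_within_budget(samples):.0%}")
    print(f"Mean jitter: {mean_jitter_ms(samples):.1f} ms")
```

With these made-up samples, a single slow response is enough to pull one in five replies over budget, which is the kind of disruption the paragraph above describes.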

Regulatory Landscape and Compliance

Smart toys collecting voice and behavioral data from children under 13 fall squarely under COPPA. Mattel must obtain verifiable parental consent and:

- Disclose what categories of data are collected.

- Provide parents with the right to review or delete their child’s data.

- Implement age screening and enforce OpenAI’s own policy prohibiting under-13 usage.
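The obligations above can be sketched as a simple gate that runs before any data is collected. This is a minimal illustration under stated assumptions, not legal guidance or a description of any vendor's system: `ChildProfile`, `may_collect`, and the category names are hypothetical, and how consent gets verified is out of scope here.

```python
from dataclasses import dataclass, field

COPPA_AGE_THRESHOLD = 13  # COPPA covers children under 13


@dataclass
class ChildProfile:
    age: int
    parental_consent_verified: bool = False
    # Data categories that were disclosed to, and approved by, the parent.
    consented_categories: set = field(default_factory=set)


def may_collect(profile: ChildProfile, category: str) -> bool:
    """Return True only if this COPPA-style check permits collecting `category`."""
    if profile.age >= COPPA_AGE_THRESHOLD:
        # Outside the scope of this check; other laws may still apply.
        return True
    return (profile.parental_consent_verified
            and category in profile.consented_categories)


if __name__ == "__main__":
    child = ChildProfile(age=8, parental_consent_verified=True,
                         consented_categories={"voice"})
    print(may_collect(child, "voice"))     # disclosed and consented
    print(may_collect(child, "location"))  # never disclosed to the parent
```

Tying the check to a per-category set keeps collection limited to what the parent was actually told about, rather than treating consent as a single global switch.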

Meanwhile, the EU’s GDPR, whose child-specific provisions are informally known as GDPR-K, and California’s CCPA impose additional transparency and data minimization requirements. Under GDPR, noncompliance can result in fines of up to 4% of global annual turnover or €20 million, whichever is higher.

Data Security and Privacy Controls

Experts recommend several best practices:

- Encryption in Transit: Use TLS 1.3 with ephemeral (EC)DHE key exchange, which provides forward secrecy, for all voice and token exchanges.

- Parental Control Dashboard: A companion mobile app should allow parents to whitelist topics, set daily interaction limits, and scrub stored logs.

- Independent Audits: Enlist third-party security firms (e.g., Cure53) for periodic penetration testing and privacy impact assessments.
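The parental controls described in the dashboard bullet could look roughly like the following sketch. Everything here is hypothetical: the class and function names, the 30-minute default, and the table layout are assumptions for illustration, and an in-memory SQLite database stands in for what would need to be an encrypted store on a real device.

```python
import sqlite3
from dataclasses import dataclass, field


@dataclass
class ParentalControls:
    allowed_topics: set = field(default_factory=set)
    daily_limit_minutes: int = 30  # hypothetical default

    def permits(self, topic: str, minutes_used_today: int) -> bool:
        """Allow a conversation only for allowlisted topics within the daily limit."""
        return (topic in self.allowed_topics
                and minutes_used_today < self.daily_limit_minutes)


def open_log_store() -> sqlite3.Connection:
    """Create an in-memory log table (a real device would use an encrypted file)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE logs (child_id TEXT, utterance TEXT)")
    return conn


def scrub_logs(conn: sqlite3.Connection, child_id: str) -> int:
    """Delete every stored log row for one child and return the number removed."""
    cur = conn.execute("DELETE FROM logs WHERE child_id = ?", (child_id,))
    conn.commit()
    return cur.rowcount


if __name__ == "__main__":
    controls = ParentalControls(allowed_topics={"animals", "space"})
    print(controls.permits("animals", minutes_used_today=10))

    conn = open_log_store()
    conn.execute("INSERT INTO logs VALUES (?, ?)", ("child-1", "Tell me about Mars"))
    print(scrub_logs(conn, "child-1"))
```

An allowlist defaults to denying anything the parent has not approved, which is the safer failure mode for a product aimed at children.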

Expert Opinions on Risks and Benefits

On LinkedIn, AI ethicist Varundeep Kaur highlighted potential gains—like personalized language learning—but warned of latent biases in large language models that could reproduce stereotypes or culturally insensitive content. Cyber safety specialist Adam Dodge of EndTab pointed to documented cases where chatbots inadvertently encouraged self-harm, underscoring the need for rigorous content filtering and human-in-the-loop review for flagged interactions.
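The combination of content filtering and human-in-the-loop review mentioned above can be sketched as a gate between the model and the toy's speaker. This is a deliberately simplified illustration: the term list, fallback message, and names such as `filter_response` are hypothetical, and a production system would rely on a trained safety classifier rather than substring matching.

```python
from collections import deque
from dataclasses import dataclass

# Placeholder term list; a real system would use a trained safety classifier.
BLOCKED_TERMS = {"self-harm"}
SAFE_FALLBACK = "Let's talk about something else. What is your favorite game?"


@dataclass
class FlaggedInteraction:
    child_utterance: str
    model_response: str


review_queue = deque()  # flagged interactions awaiting human review


def filter_response(child_utterance: str, model_response: str) -> str:
    """Return the response if it passes the filter, else a safe fallback.

    Blocked responses are queued so a human reviewer can inspect them later.
    """
    text = model_response.lower()
    if any(term in text for term in BLOCKED_TERMS):
        review_queue.append(FlaggedInteraction(child_utterance, model_response))
        return SAFE_FALLBACK
    return model_response


if __name__ == "__main__":
    print(filter_response("Hi!", "Hello there!"))
    print(filter_response("I feel sad", "Here is text mentioning self-harm"))
    print(len(review_queue))
```

The key design point is that a flagged response never reaches the child: it is replaced immediately and reviewed afterwards, so the human step adds oversight without adding latency to safe conversations.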

Litigation and Intellectual Property Concerns

Beyond child welfare, Mattel faces potential intellectual property disputes. Hollywood studios recently sued an AI image generator for unauthorized use of character likenesses. Similarly, an AI-powered Barbie that echoes trademarked slogans or catchphrases without a license could prompt costly litigation. Mattel must ensure its fine-tuning datasets exclude unlicensed copyright-protected material.

Future Outlook and Best Practices for AI-Driven Play

To foster public trust and safe adoption, industry stakeholders recommend:

- Publishing a Responsible AI Toy Charter including ethical guidelines, safety thresholds, and performance benchmarks.

- Engaging child development psychologists early in the design cycle to assess social and cognitive impacts.

- Collaborating with regulators such as the Federal Trade Commission to pilot voluntary certification programs.

While the promise of interactive, learning-oriented play is compelling, Mattel and OpenAI must resist rushing to market without robust technical and policy guardrails. The coming months will reveal whether this collaboration becomes a beacon for responsible AI in toys or a cautionary tale of innovation outpacing oversight.