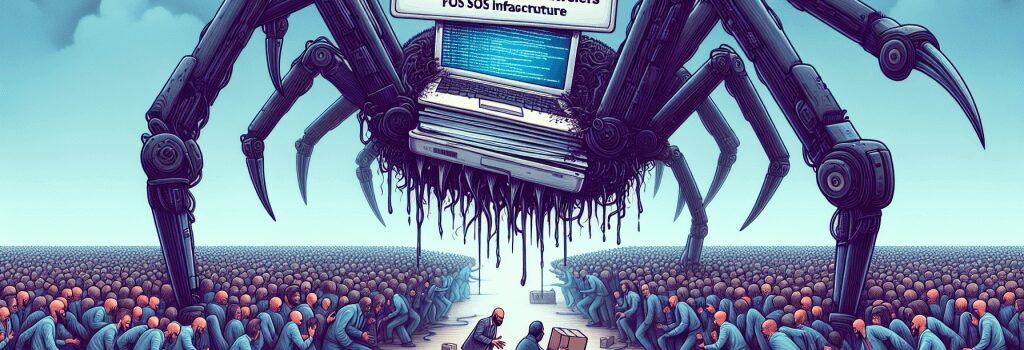

Open Source Under Siege: The Impact of AI Crawlers on FOSS Infrastructure

The Great Flood of AI Crawlers

For many open source developers, a new crisis is unfolding: AI crawlers built to harvest training data are, often unintentionally, hitting community-run websites with traffic that behaves like a distributed denial-of-service (DDoS) attack. These bots pass themselves off as legitimate users by spoofing user-agent strings, rotating through residential IP addresses, and evading conventional security measures.

Software developer Xe Iaso reached a breaking point when aggressive bot traffic overwhelmed Iaso’s Git repository service. Even after updating the robots.txt file, blocking known crawler user-agents, and filtering suspicious traffic, Iaso found that the crawlers kept coming. This experience is increasingly common across the open source landscape, and it shows how little these conventional defenses can do against crawlers that ignore or evade them.
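Filtering by user-agent, one of the defenses Iaso tried, only works against bots that announce themselves honestly. The minimal Python sketch below assumes a hypothetical, deliberately tiny blocklist of crawler name tokens (`BLOCKED_UA_TOKENS`, illustrative rather than exhaustive) and shows why a bot that sends an ordinary browser string passes straight through.

```python
# Hypothetical blocklist of crawler name tokens; illustrative only, not exhaustive.
BLOCKED_UA_TOKENS = ("GPTBot", "CCBot", "Bytespider", "Amazonbot")


def is_blocked_user_agent(user_agent: str) -> bool:
    """Return True if the User-Agent header contains a blocklisted token (case-insensitive)."""
    ua = user_agent.lower()
    return any(token.lower() in ua for token in BLOCKED_UA_TOKENS)


if __name__ == "__main__":
    # Illustrative header values, not copied from real traffic logs.
    honest_bot = "Mozilla/5.0 (compatible; GPTBot/1.0)"
    spoofed_bot = (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
    )
    print(is_blocked_user_agent(honest_bot))   # True: the bot names itself
    print(is_blocked_user_agent(spoofed_bot))  # False: a spoofed browser string slips through
```

The check is cheap, which is why it is usually the first line of defense, but it relies entirely on the client telling the truth.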

Innovative Defensive Strategies and Their Trade-Offs

In response to the escalating crisis, several developers have built more aggressive defenses. Iaso developed a custom proof-of-work challenge system called “Anubis,” which requires every visitor’s browser to solve a computational puzzle before it can access the site. The system is effective at deterring bot traffic, but it also delays legitimate users, in some cases by up to two minutes at peak times.
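The general idea behind a proof-of-work gate can be sketched in a few lines. This is a generic hashcash-style example, not Anubis’s actual code or protocol: the challenge format, the SHA-256 leading-zeros rule, and the function names `make_challenge`, `solve`, and `verify` are all assumptions made for illustration. The key property is the asymmetry: the client must try many hashes, while the server checks the answer with one.

```python
import hashlib
import secrets


def make_challenge() -> str:
    """Server side: issue a random 32-hex-character challenge (hypothetical format)."""
    return secrets.token_hex(16)


def solve(challenge: str, difficulty: int) -> int:
    """Client side: brute-force a nonce whose SHA-256 hex digest starts with `difficulty` zeros."""
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()
        if digest.startswith(target):
            return nonce
        nonce += 1


def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Server side: checking a submitted nonce costs a single hash."""
    digest = hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)


if __name__ == "__main__":
    challenge = make_challenge()
    nonce = solve(challenge, difficulty=4)  # about 16**4 = 65,536 hashes on average
    print(challenge, nonce, verify(challenge, nonce, difficulty=4))
```

The `difficulty` knob is where the trade-off described above lives: each extra hex zero multiplies the expected client work by 16, which raises the cost for mass crawlers but also lengthens the wait for a person on a slow device.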

Similarly, Fedora Pagure’s sysadmin team had to block traffic from entire regions, such as Brazil, after standard mitigation techniques failed to rein in AI crawler traffic. KDE’s GitLab infrastructure also suffered significant downtime when traffic from Alibaba IP ranges overloaded the system. Such drastic measures show how severely unmanaged crawler traffic can disrupt critical public resources.
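Blocking a region or a provider usually comes down to matching client addresses against lists of IP ranges. The sketch below uses Python’s standard `ipaddress` module; the ranges in `BLOCKED_NETWORKS` are IETF documentation prefixes chosen as hypothetical stand-ins, not real Alibaba or Brazilian allocations.

```python
import ipaddress

# Hypothetical blocked ranges: documentation prefixes standing in for real allocations.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_blocked_ip(addr: str) -> bool:
    """Return True if `addr` falls inside any blocked network.

    Membership tests between IPv4 and IPv6 objects simply return False,
    so mixed-version lists are safe to scan.
    """
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in BLOCKED_NETWORKS)


if __name__ == "__main__":
    for addr in ("203.0.113.57", "192.0.2.1", "2001:db8::1"):
        print(addr, is_blocked_ip(addr))
```

Range blocking is blunt in both directions: it turns away every legitimate user inside a blocked range, and crawlers routed through residential proxies can appear from addresses that no list covers.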

Technical Deep Dive: How AI Crawlers Operate

At the heart of this challenge are the evolving techniques of AI crawlers. Reports from affected projects point to the following traits, which make these crawlers both hard to stop and costly to serve:

- Spoofing Capabilities: Bots dynamically change their user-agent strings and utilize residential proxy networks, making it difficult for traditional filters to distinguish them from real users.

- Persistent Crawling Patterns: Reports indicate that many crawlers repeatedly revisit the same content, such as every page of a Git commit log, on a cyclical basis (often every six hours), presumably to keep their training datasets current.

- Economic Impact: The Read the Docs project saw its traffic fall by 75% once it blocked AI crawlers, saving approximately $1,500 per month in bandwidth costs.
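As a rough cross-check, the Read the Docs figures imply a pre-blocking bandwidth bill, under two assumptions that the source does not state: bandwidth cost scales linearly with traffic, and the entire $1,500 saving comes from the 75% traffic reduction. Here $C_{\text{before}}$ and $C_{\text{after}}$ are hypothetical monthly costs introduced only for this estimate.

```latex
\[
\Delta C = 0.75\,C_{\text{before}} = \$1{,}500/\text{month}
\quad\Rightarrow\quad
C_{\text{before}} = \frac{\$1{,}500}{0.75} = \$2{,}000/\text{month},
\qquad
C_{\text{after}} = 0.25 \times \$2{,}000 = \$500/\text{month}.
\]
```

Under these assumptions, roughly three of every four dollars the project spent on bandwidth were going to AI crawlers.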

Deeper Analysis: Economic and Ethical Dimensions

The economic burden imposed by these crawlers is significant, especially for FOSS projects that operate on tight budgets. Smaller projects must absorb rising bandwidth costs and infrastructure strain caused, often inadvertently, by large, data-hungry corporations. Critics argue that this is not merely a technical problem but also an ethical one, raising questions about fair data use and consent in the AI landscape.

Many developers and sysadmins have voiced concerns that AI companies with vast capital (some, by certain estimates, commanding over $100bn in resources) extract data without appropriate collaboration or compensation. The imbalance compounds the ethical dilemma: small open source projects bear the costs, while much larger corporations remain largely unaccountable for the collateral damage their crawlers cause.

Emerging Solutions: Tarpits, Labyrinths, and Collaborative Projects

In the wake of these challenges, new defenses have begun to emerge. One is “Nepenthes,” a tool developed by an anonymous security researcher known as Aaron. It creates digital tarpits, endless mazes of fake content that force bots to waste computational resources and thereby indirectly penalize aggressive data collectors. Cloudflare has also entered the fray with its “AI Labyrinth” feature, which redirects unauthorized crawler traffic to dynamically generated AI pages designed to consume bot resources rather than block the bots outright.
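The core trick of a tarpit, a maze that never runs out, can be shown with deterministic page generation. This is a hypothetical sketch, not code from Nepenthes or Cloudflare: `tarpit_page` seeds a random generator from a hash of the requested path, so every URL returns stable filler text plus links to more made-up URLs, and the server needs no storage to serve an unbounded site. It omits any response throttling or AI-generated text a real deployment might use.

```python
import hashlib
import random

# Hypothetical filler vocabulary for decoy pages.
WORDS = ["commit", "branch", "merge", "patch", "release", "tag", "diff", "tree"]


def tarpit_page(path: str, links_per_page: int = 5) -> str:
    """Generate a deterministic fake HTML page for `path` that links to more fake pages.

    Seeding the RNG from a hash of the path means the same URL always yields the
    same content, so the maze looks stable to a crawler.
    """
    seed = int.from_bytes(hashlib.sha256(path.encode()).digest()[:8], "big")
    rng = random.Random(seed)
    text = " ".join(rng.choice(WORDS) for _ in range(30))
    # Each child link appends a random 8-hex-digit segment, so depth is unbounded.
    links = [f"{path.rstrip('/')}/{rng.getrandbits(32):08x}" for _ in range(links_per_page)]
    anchors = "".join(f'<a href="{href}">{href}</a>\n' for href in links)
    return f"<html><body><p>{text}</p>\n{anchors}</body></html>"


if __name__ == "__main__":
    print(tarpit_page("/maze"))
```

With five links per page, a crawler that follows every link faces 5^d distinct pages at depth d, so its work grows exponentially while each response costs the server only a hash and a few string operations.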

Additionally, community-driven initiatives such as the “ai.robots.txt” project offer ready-made robots.txt files that implement the Robots Exclusion Protocol for known AI crawlers, along with .htaccess configurations that return error pages when AI crawler requests are detected. Because robots.txt is only advisory, those server-side rules matter for crawlers that ignore it. These collaborative approaches reflect a wider push within the open source community for more ethical and sustainable data collection.
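How much a robots.txt file actually protects depends on the crawler choosing to read it. The sketch below uses Python’s standard `urllib.robotparser` to show how a compliant crawler would interpret a file in the spirit of the ai.robots.txt project; the two-agent list and the `git.example.org` URL are hypothetical placeholders, not the project’s real contents.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: a tiny illustrative subset, not the ai.robots.txt list itself.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

URL = "https://git.example.org/repo/commits"  # placeholder URL

if __name__ == "__main__":
    for agent in ("GPTBot", "CCBot", "Mozilla/5.0"):
        # can_fetch only reports what the file asks for; nothing forces a crawler to call it.
        print(agent, parser.can_fetch(agent, URL))
```

A crawler that identifies itself as GPTBot and honors the file is refused, but one that presents a generic browser string falls through to the `*` group and is allowed, which is why projects pair robots.txt with server-side blocking.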

Future Outlook: Navigating Regulatory and Community Responses

Looking forward, many observers expect the arms race between data-hungry AI crawlers and the defensive measures of the open source community to escalate. As it intensifies, calls are growing for regulatory oversight and industry standards that would require AI companies to operate transparently and respect public digital infrastructure.

Regulators and policymakers may soon need to step in to establish clear guidelines for data scraping activities, ensuring that no single entity can impose unfair economic or operational burdens on the digital commons. Until such frameworks are in place, collaborative efforts among developers, security experts, and even AI firms will be crucial in maintaining the integrity of essential online services.

Concluding Thoughts

The surge of AI crawler traffic serves as a stark reminder of the double-edged nature of technological advancement. On one hand, these crawlers enable robust data collection and rapid training of cutting-edge AI models; on the other, their unchecked proliferation endangers the very foundations of open source infrastructure. With key public resources at stake, fostering a collaborative dialogue between AI companies and the open source community is not only advisable but necessary to protect the digital ecosystem.

In an era where technology continues to reshape every facet of our digital world, balancing innovation with ethical responsibility will be the key to ensuring a sustainable and secure future for all online communities.

Source: Ars Technica